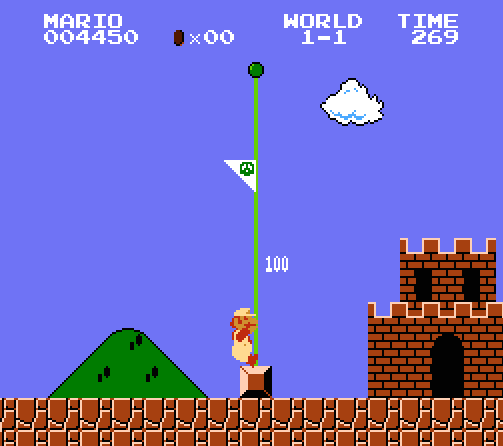

Sprint 4 - 2D Platformer: Completed First Level

Due: BEFORE Nov. 09, 11:59:59 pm

Group Team Rubrics (Word, PDF): due Nov. 09 via email.

Objectives:

- Finish any incomplete stories from Sprints 1, 2 and 3 (these are now required stories to be completed in Sprint 4).

- Add the heads-up display (HUD); the font does not need to match the one used in the original game.

- Add sounds.

- Add points and the score system. Make sure the player gets extra points for jumping on multiple enemies in a row without touching the ground.

- Add coins, the coin system, and lives.

- Implement game state transitions:

- The remaining lives screen before/after deaths

- Warp pipe transition to secret underground room (and coming back up again)

- Pausing

- The winning game state and flagpole animation when Mario reaches the end of the level

- Implement any other features you deem necessary to replicate the gameplay of level 1-1.

- Remove all magic numbers and strings by moving them into utility classes (and, optionally, reading their values from a file).

- Begin researching and planning implementation ideas for Sprint 5.
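The stomp bonus in the score objective can be tracked with a chain counter that advances on every stomp while Mario stays airborne and resets when he lands. The following is a minimal sketch in Java (it maps directly onto a C# class); `StompComboTracker` and its methods are hypothetical names, and the point table is an assumption modeled on the original game's escalating values, so verify it against your own reference.

```java
import java.util.List;

/** Hypothetical helper: awards escalating points for consecutive stomps without landing. */
class StompComboTracker {
    // Assumed point table; in your project this belongs in a constants/utility class.
    private static final List<Integer> COMBO_POINTS =
            List.of(100, 200, 400, 500, 800, 1000, 2000, 4000, 5000, 8000);

    // Number of enemies stomped since Mario last touched the ground.
    private int chainLength = 0;

    /** Call when Mario lands on top of an enemy; returns the points earned for this stomp. */
    int onEnemyStomped() {
        // Past the end of the table, keep awarding the last (largest) value.
        int index = Math.min(chainLength, COMBO_POINTS.size() - 1);
        chainLength++;
        return COMBO_POINTS.get(index);
    }

    /** Call when Mario touches the ground or the top of a solid block; ends the chain. */
    void onLanded() {
        chainLength = 0;
    }
}
```

Collision handling would call `onEnemyStomped()` and pass the result to the score system, and the ground-collision handler would call `onLanded()`. Past the end of the table this sketch simply repeats the last value; if you want long chains to award extra lives instead, that logic would go in the same method.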
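For the coins and lives objective, the counters the HUD displays can live in one small class so that the coin rollover rule sits in a single place. A minimal sketch in Java (the same shape works as a C# class), assuming a hypothetical `PlayerStats` class; the 100-coins-per-life rollover and the per-coin point value are assumptions based on the original game and should be moved into your constants class.

```java
/** Hypothetical score/coin/lives bookkeeping that the HUD can read from. */
class PlayerStats {
    static final int COINS_PER_EXTRA_LIFE = 100; // assumed rollover threshold
    static final int POINTS_PER_COIN = 200;      // assumed point value per coin

    private int coins;
    private int lives;
    private int score;

    PlayerStats(int startingLives) {
        this.lives = startingLives;
    }

    /** Adds one coin and its points; at the threshold the counter rolls over and a life is granted. */
    void collectCoin() {
        score += POINTS_PER_COIN;
        coins++;
        if (coins >= COINS_PER_EXTRA_LIFE) {
            coins -= COINS_PER_EXTRA_LIFE;
            lives++;
        }
    }

    /** Adds points from any other source (stomps, blocks, flagpole, time bonus). */
    void addPoints(int points) {
        score += points;
    }

    /** Removes a life; returns true when no lives remain, i.e. the game is over. */
    boolean loseLife() {
        lives--;
        return lives <= 0;
    }

    int getCoins() { return coins; }
    int getLives() { return lives; }
    int getScore() { return score; }
}
```

This sketch treats reaching zero lives as game over; adjust `loseLife()` if your team counts lives differently on the remaining-lives screen.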
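The game state transitions in the objectives (remaining-lives screen, warp pipe, pausing, flagpole win) can be organized as a small state machine so that `Update` and `Draw` only need to ask which state the game is in. A minimal enum-based sketch in Java; `GameState`, `GameStateMachine`, and every method name are hypothetical, and a team already using the State pattern for Mario could apply that pattern here instead.

```java
/** Hypothetical top-level game states covering the transitions in the objectives. */
enum GameState { LIVES_SCREEN, PLAYING, PAUSED, WARPING, LEVEL_COMPLETE, GAME_OVER }

/** Minimal state machine; every transition except togglePause throws if called in the wrong state. */
class GameStateMachine {
    private GameState state = GameState.LIVES_SCREEN;

    GameState getState() {
        return state;
    }

    /** The remaining-lives screen has finished; start (or restart) the level. */
    void livesScreenFinished() {
        require(GameState.LIVES_SCREEN);
        state = GameState.PLAYING;
    }

    /** Pauses while playing, resumes while paused, and is ignored in any other state. */
    void togglePause() {
        if (state == GameState.PLAYING) {
            state = GameState.PAUSED;
        } else if (state == GameState.PAUSED) {
            state = GameState.PLAYING;
        }
    }

    /** Mario entered a warp pipe; the pipe animation plays in this state. */
    void enterPipe() {
        require(GameState.PLAYING);
        state = GameState.WARPING;
    }

    /** The pipe animation finished and the new area (underground room or overworld) is loaded. */
    void warpFinished() {
        require(GameState.WARPING);
        state = GameState.PLAYING;
    }

    /** Mario died; show the remaining-lives screen again or end the game. */
    void playerDied(boolean livesRemaining) {
        require(GameState.PLAYING);
        state = livesRemaining ? GameState.LIVES_SCREEN : GameState.GAME_OVER;
    }

    /** Mario touched the flagpole; the flagpole animation runs in this state. */
    void reachedFlagpole() {
        require(GameState.PLAYING);
        state = GameState.LEVEL_COMPLETE;
    }

    private void require(GameState expected) {
        if (state != expected) {
            throw new IllegalStateException("Expected " + expected + " but was " + state);
        }
    }
}
```

In the game loop, a `switch` on `getState()` would decide what runs each frame; for example, while `PAUSED` only input is processed and nothing in the level updates. Throwing on illegal transitions makes bugs such as dying mid-warp visible immediately instead of leaving the game in an inconsistent state.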
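For the magic-number objective, one option is a settings class that names every value in one place and optionally overrides it from a `key=value` file. A minimal sketch in Java using `java.util.Properties`; `GameSettings`, its keys, and its default values are hypothetical placeholders (the same shape works as a C# class that reads your own file format).

```java
import java.io.IOException;
import java.io.Reader;
import java.util.Properties;

/** Hypothetical settings holder: named defaults, optionally overridden from a key=value file. */
final class GameSettings {
    // Named defaults replace literals scattered through the code; values here are placeholders.
    static final int DEFAULT_STARTING_LIVES = 3;
    static final int DEFAULT_LEVEL_TIME = 400;
    static final String DEFAULT_LEVEL_FILE = "Levels/1-1.csv";

    private final Properties values = new Properties();

    /** Loads overrides in java.util.Properties format; keys absent from the file keep their defaults. */
    void load(Reader source) throws IOException {
        values.load(source);
    }

    int getInt(String key, int fallback) {
        String raw = values.getProperty(key);
        return raw == null ? fallback : Integer.parseInt(raw.trim());
    }

    String getString(String key, String fallback) {
        return values.getProperty(key, fallback);
    }

    int startingLives() { return getInt("startingLives", DEFAULT_STARTING_LIVES); }
    int levelTime() { return getInt("levelTime", DEFAULT_LEVEL_TIME); }
    String levelFile() { return getString("levelFile", DEFAULT_LEVEL_FILE); }
}
```

Game code then calls `settings.startingLives()` instead of writing `3`, so changing a value means editing one line or one file rather than hunting for every copy of the literal.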

Sprint 4 Responsibilities:

- DevOps - Scrum Tracking Task Status and Remaining Work (20 points):

- Add dates for Sprint 4 (10/27 - 11/09).

- Move any incomplete (Not marked Done) stories (with associated tasks) from Sprints 1, 2 and 3 to Sprint 4.

- Using the PBI stories provided to you (Mario PBIs), add several product backlog items covering this sprint's requirements and assign them to Sprint 4.

Sprint 4 PBIs:

- 32 - Player Feedback in a Transparent Non-Scrolling Layer

- 33 - Collecting Points through Collisions

- 34 - Collecting Points through Finishing the Level

- 35 - Collecting Coins (and extra life?) through collisions

- 36 - Player "Hit" tracking

- 37 - Time Limit as a Bonus or defeat

- 38 - Level Soundtrack (Background Music)

- 39 - Event Sound Effects

- 40 - Level Restart

- 41 - Game Over "Splash Screen"

- 42 - Pausing Game Play/Resume

- 43 - Winning Game State

- 45 - Warp Pipe (Hiding Place for Enemies and Items)

- 46 - Warp Pipe (Warping)

- 47 - Secret, Hidden Area

- Add tasks, with an effort amount, under each backlog item. Be sure each task is assigned to someone. Finish adding tasks before class on 10/29.

- Grading will be based on:

- Completeness of tasks based on covering the requirements (stories) for the sprint. This is worth 10% of the points for this sprint. I'm reviewing tasks for quality of content: tasks should describe the HOW, from the assignee's perspective, of accomplishing the WHAT from the related story (PBI) (10 points)

- Did everyone have at least one task assigned to them and track progress over the course of the sprint? You lose points when I can tell from reviewing the history that Work Remaining went from 0 to N and back to 0 in less time than it would reasonably take to accomplish the task (5 points)

- Quality of the Velocity and Burndown charts at the end of the sprint (5 points).

- During the Sprint:

- Each team member should implement the tasks assigned to them.

- When you sit down to work on this sprint, drag the task you are going to work on to In Progress on the board.

- If you finished the task during that session, update Work Remaining to 0, drag it to Done, and check your work into DevOps.

- If you did not finish but it compiles, update the Remaining Work for the task and check your work into DevOps.

- If it is not finished and does not compile, update the Remaining Work for the task and make sure your work is saved (or shelved).

- Sprint 4 Release (80 points):

- Run Code Analysis on your solution and fix any reported warnings or errors. For warnings you do not wish to fix, provide justification in the readme file. More details on this are posted below the grading outline.

- You should place a high priority on code quality for maintenance and flexibility of the system. For the file you reviewed for readability in the initial implementation, you should now do a code quality review. Discuss code quality in terms of the metrics discussed in class and offer constructive criticism to improve it. More details on how to do code reviews are posted below the grading outline.

- Grading will be based on:

- the number of features and the level of difficulty in implementing them (15 points)

- implementation quality and correctness - how much was completed and does it work (50 points)

- amount of Code Analysis warnings (5 points)

- did everyone contribute to the implementation (5 points)

- did everyone contribute to code reviews on code quality (5 points)

Work to turn in:

- Do a Build->Clean Solution in Visual Studio and, without rebuilding, create a .zip of your entire project directory (make absolutely certain your .zip contains the .sln file and the Content hierarchy).

- Next, perform a Build in Visual Studio and add the bin directory (Debug or Release) and its contents to the .zip file. Make SURE you independently verify that the bin folder is complete and that your submission will RUN!

- Upload the .zip to the Sprint 4 dropbox on Carmen.

- The dropbox is set up to accept multiple submissions; only the latest submission before the deadline will be graded.

- Please let the instructor know if you have any difficulties submitting your work.

Grading:

See above. In addition, your individual scores will be weighted based on the team rubrics and activity in DevOps.

Notes on grading of sprints

Sprint Rubric and Additional Information: same as in earlier sprints

On code reviews:

In the root folder of the project, add a folder to store code reviews. To add a plaintext file to the project in this folder, go to the Project menu, choose Add New Item, and select Text File under the General option.

Each submitted code review must contain commentary on code from the current sprint. The expectation is not for you to "go through the exercise simply checking the boxes"; the expectation is that you invest time reviewing the current sprint's code contributions to identify potential problem areas for future improvement.

In the plaintext file for a code quality review, include the following information in this exact order:

- Author of the code review

- Date of the code review

- Sprint number

- Name of the .cs file being reviewed

- Author of the .cs file being reviewed

- Specific comments on code quality

- Number of minutes taken to complete the review

- A hypothetical change to make to the game related to the file being reviewed, and how the current implementation could or could not easily support that change

On refactoring:

You should be using some of the features under the Analyze menu in Visual Studio. Calculating Code Metrics will give you a general idea of the quality of the code, and running Code Analysis will point to specific lines of code to change to fit style guidelines. Configure Code Analysis to use “Microsoft Basic Design Guideline Rules”. If you are having trouble figuring out how to use the Code Analysis tools, Microsoft offers a lab to help learn it here. Alternatively, you can use this document or this tutorial video. Fix as many Code Analysis errors as possible. If fixing one forces a design change that your team does not have time to complete, document it in a readme file placed near the root folder of the project. You should also use a readme to document user input if the suggested controls above are not used.